USP 2020 English - Questions

Assigning female genders to digital assistants such as Apple’s Siri and Amazon’s Alexa is helping entrench harmful gender biases, according to a UN agency. Research released by Unesco claims that the often submissive and flirty responses offered by the systems to many queries – including outright abusive ones – reinforce ideas of women as subservient.

“Because the speech of most voice assistants is female, it sends a signal that women are obliging, docile and eager‐to‐please helpers, available at the touch of a button or with a blunt voice command like ‘hey’ or ‘OK’”, the report said.

“The assistant holds no power of agency beyond what the commander asks of it. It honours commands and responds to queries regardless of their tone or hostility. In many communities, this reinforces commonly held gender biases that women are subservient and tolerant of poor treatment.”

The Unesco publication was entitled “I’d Blush if I Could”, a reference to the response Apple’s Siri assistant offers to the phrase: “You’re a slut.” Amazon’s Alexa will respond: “Well, thanks for the feedback.”

The paper said such firms were “staffed by overwhelmingly male engineering teams” and have built AI (Artificial Intelligence) systems that “cause their feminised digital assistants to greet verbal abuse with catch‐me‐if‐you‐can flirtation”.

Saniye Gülser Corat, Unesco’s director for gender equality, said: “The world needs to pay much closer attention to how, when and whether AI technologies are gendered and, crucially, who is gendering them.”

The Guardian, May 2019. Adapted.

According to the text, with regard to women, one effect of digital assistants reinforcing gender stereotypes is

According to the text, with regard to digital assistants, the title of the report published by Unesco, “I’d Blush if I Could”, indicates

According to the text, in the opinion of Saniye Gülser Corat, technologies involving Artificial Intelligence, among other aspects,

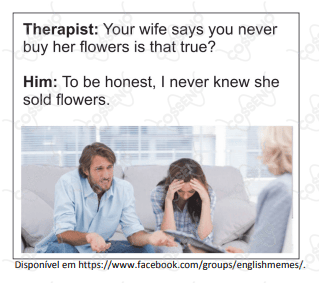

Available at https://www.facebook.com/groups/englishmemes/.

The comic effect of the meme derives, above all, from the
